One of the things that can be difficult when starting with a new technology, framework or tool is knowing where to get started. That “get started” can mean many different things to different people. Over the past 6 months or so, I’ve been learning Rust and deploying it into production in AWS. I’ve gone back and forth on my workflow and wanted to put together a Serverless Rust Developer Experience article. As you begin with Rust and Serverless, this should give you some good places to get started.

Category: Programming

How to Build with Rust and Lambda

Rust and Lambda are new friends. Sure, there’s a great deal of momentum lately around Rust, but the language has been around for almost 20 years. It struggled to take off early on but has seen its adoption increase since the creation of the Rust Foundation in 2021.

AWS, among many others, has adopted the language for mission-critical workloads that require blazing fast performance, type safety and a solid developer experience. AWS believes so much in the language that it has built components with it in some of its stalwart services like S3, CloudFront, EC2 and Lambda, including the microVM technology Firecracker.

I’ve been working with Rust for the better part of 6 months, which gives me just enough experience to highlight the things I like and the things I have struggled with when building Lambdas. I believe that if you can get over the hurdle of learning Rust, you’ll gain some amazing benefits that outweigh the challenges of “getting started”. Welcome to building Lambdas with Rust.

Partitioned S3 Bucket from DynamoDB

I’ve been working recently with some data that doesn’t naturally fit into my AWS HealthLake datastore. I have some additional information captured in a DynamoDB table that would be useful to blend with HealthLake but on its own is not a FHIR resource. I pondered this for a while and came up with the idea of piping DynamoDB stream changes to S3 so that I could then pick them up with AWS Glue. In this article, I want to show you an approach to building a partitioned S3 bucket from DynamoDB. Refining that further with Glue jobs, tables and crawlers will come later.
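To make “partitioned” a bit more concrete, here is a minimal sketch of how an object key could be derived from a change record’s timestamp. It assumes a Hive-style `year=/month=/day=` layout, which is one common convention that Glue crawlers can pick up, and it is not necessarily the layout used in the full article. The function names, the `prefix` and the `record_id` are all hypothetical.

```rust
/// Convert days since 1970-01-01 into a (year, month, day) civil date in UTC.
/// Uses plain integer arithmetic so the sketch needs no date crate.
fn civil_from_days(days: i64) -> (i64, u32, u32) {
    let z = days + 719_468; // shift the epoch to 0000-03-01
    let era = z.div_euclid(146_097); // number of 400-year cycles
    let doe = z.rem_euclid(146_097); // day of era, 0..=146096
    let yoe = (doe - doe / 1_460 + doe / 36_524 - doe / 146_096) / 365; // year of era, 0..=399
    let doy = doe - (365 * yoe + yoe / 4 - yoe / 100); // day of year, counted from March 1
    let mp = (5 * doy + 2) / 153; // month index, March = 0
    let day = (doy - (153 * mp + 2) / 5 + 1) as u32;
    let month = (if mp < 10 { mp + 3 } else { mp - 9 }) as u32;
    // January and February belong to the following civil year.
    let year = yoe + era * 400 + i64::from(month <= 2);
    (year, month, day)
}

/// Build a Hive-style S3 object key for one change record (assumed layout).
fn partitioned_key(prefix: &str, epoch_seconds: i64, record_id: &str) -> String {
    let (year, month, day) = civil_from_days(epoch_seconds.div_euclid(86_400));
    format!(
        "{}/year={:04}/month={:02}/day={:02}/{}.json",
        prefix, year, month, day, record_id
    )
}
```

Calling `partitioned_key("changes", 1_700_000_000, "abc-123")` yields `changes/year=2023/month=11/day=14/abc-123.json`. Keeping the partition values in the key itself means a query engine can prune by date without opening any objects.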

Consuming an SQS Event with Lambda and Rust

I’ve been trying to learn Rust for the better part of this year. My curiosity was piqued a few years back when I learned the AWS-led Firecracker was developed with the language, and I’ve wanted to learn it ever since. Fast-forward and I’m jumping in with both feet. That’s usually how I work. I must admit that right now, I’m the most noob of noobs, but that’s not going to keep me from sharing what I’m up to and what I’m learning. For me, this blog is as much about sharing as it is about learning and communicating to those reading that it’s OK to be where you are in your journey. There are no straight lines. Only periods of growth and plateaus. In this article, I’ll walk you through consuming an SQS Event with Lambda and Rust.

WebSocket with AWS API Gateway

I was working recently with some backend code and I needed to communicate the success or failure of the result back to my UI. I instantly knew that I needed to put together a WebSocket to handle this interaction between the backend and the front end. With all the Serverless and non-Serverless options out there though, which way do I go? How about plain old WebSockets with AWS API Gateway and Serverless?

DynamoDB Streams EventBridge Pipes Multiple Items

I’ve written a few articles lately on EventBridge Pipes and specifically around using them with DynamoDB Streams. I’ve written about Enrichment. And I’ve written about just straight Streaming. I believe that using EventBridge Pipes plays a nice part in a Serverless, Event-Driven approach. So in this article, I want to explore Streaming DynamoDB to EventBridge Pipes with multiple items in one table.

Several of the comments I received about Streaming DynamoDB to EventBridge Pipes were along the lines of, “What if I have multiple item collections in the same table?” I intend to show a pattern for handling that exact problem in this article. At the bottom, you’ll find a working code sample that you can deploy and build on top of. I’ve used this exact setup in production, so rest assured that this is a great base to start from.
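As a rough illustration of the problem, here is a small sketch of telling item collections apart when they share one table. EventBridge Pipes can filter a stream with an event pattern, so this is not how the pipe itself is configured. It only shows the routing decision in plain Rust. The `USER#` and `ORDER#` sort-key prefixes and the target names are assumptions made up for the example.

```rust
/// Hypothetical item collections that share a single table.
#[derive(Debug, PartialEq)]
enum ItemCollection {
    User,
    Order,
    Unknown,
}

/// Classify a change record by the prefix of its sort key (assumed convention).
fn classify(sort_key: &str) -> ItemCollection {
    if sort_key.starts_with("USER#") {
        ItemCollection::User
    } else if sort_key.starts_with("ORDER#") {
        ItemCollection::Order
    } else {
        ItemCollection::Unknown
    }
}

/// Pick a downstream target name for a record, or None to drop it.
/// The target names are placeholders, not real resources.
fn route(sort_key: &str) -> Option<&'static str> {
    match classify(sort_key) {
        ItemCollection::User => Some("user-changes"),
        ItemCollection::Order => Some("order-changes"),
        ItemCollection::Unknown => None,
    }
}
```

With these assumptions, `route("ORDER#1001")` returns `Some("order-changes")` and anything with an unrecognised prefix is dropped rather than sent to the wrong consumer.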

AWS Step Functions Callback Pattern

Some operations in a system run asynchronously. Often, those same operations are also responsible for coordinating external workflows and reporting an overall status on the execution of the main workflow. A natural fit for this problem with AWS is to use Step Functions and make use of the Callback pattern. In this article, I’m going to walk through an example of the Callback pattern while using AWS’ HealthLake and its export capabilities as the backbone for the async job. Welcome to the AWS Step Functions Callback Pattern.

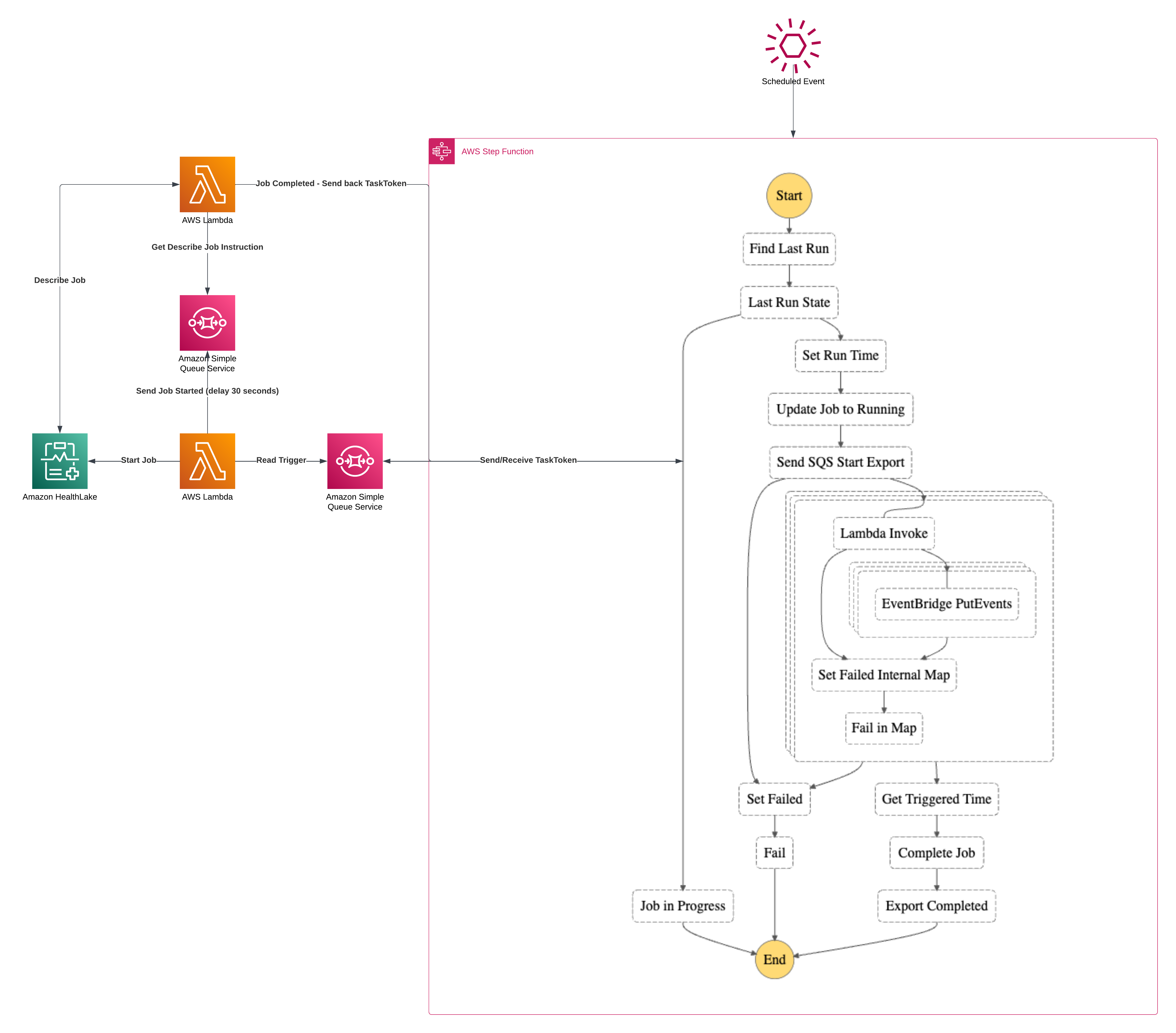

Callback Workflow Solution Architecture

Let’s start with the overarching architecture diagram. The general premise of the solution is that AWS’ HealthLake allows the export of all resources “since the last time”. By using Step Functions, Lambdas, SQS, DynamoDB, S3, Distributed Maps and EventBridge, I’m going to build the ultimate Serverless Callback workflow. I feel like outside of Kinesis and SNS, I’ve touched them all in this one.

There’s quite a bit going on in here so I’m going to break it down into segments which will be:

- Triggering the State Machine

- Record Keeping and Run Status

- Running the Export and initiating the Callback

- Polling the Export and Restarting the State Machine

- Working the results

- Wrapping Up

- Dealing with Failure

Hang tight, there’s going to be a bunch of code and lots of detail. If you want to jump to the code, it’s down at the bottom.
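Before the detail, here is a minimal sketch of the core idea behind the Callback pattern, modelled in plain Rust with no AWS calls. When the state machine pauses, it hands out a task token. Something has to remember that token, and a poller later uses it to resume the workflow once the export finishes. In the real thing the resume step is a Step Functions `SendTaskSuccess` or `SendTaskFailure` call. Here the `RunStore`, `ExportStatus` and `Callback` types are hypothetical stand-ins, with a `HashMap` playing the role of the record-keeping table.

```rust
use std::collections::HashMap;

/// Status reported by the long-running export job (simplified).
#[derive(Debug)]
enum ExportStatus {
    InProgress,
    Completed,
    Failed(String),
}

/// What the poller should send back to the paused state machine.
#[derive(Debug, PartialEq)]
enum Callback {
    Success { task_token: String },
    Failure { task_token: String, cause: String },
}

/// Stand-in for the record-keeping table: export job id -> task token.
struct RunStore {
    tokens: HashMap<String, String>,
}

impl RunStore {
    fn new() -> Self {
        RunStore { tokens: HashMap::new() }
    }

    /// Called when the workflow pauses: remember which token belongs to which job.
    fn pause(&mut self, job_id: &str, task_token: &str) {
        self.tokens.insert(job_id.to_string(), task_token.to_string());
    }

    /// Called by the poller: decide whether the paused workflow can be resumed.
    /// The token is removed once used so a job is only resumed once.
    fn poll(&mut self, job_id: &str, status: ExportStatus) -> Option<Callback> {
        match status {
            ExportStatus::InProgress => None,
            ExportStatus::Completed => self
                .tokens
                .remove(job_id)
                .map(|task_token| Callback::Success { task_token }),
            ExportStatus::Failed(cause) => self
                .tokens
                .remove(job_id)
                .map(|task_token| Callback::Failure { task_token, cause }),
        }
    }
}
```

While the export is still in progress, `poll` returns `None` and the workflow stays paused. Once it completes or fails, the stored token comes back wrapped in the matching `Callback`, which is the point where a real poller would call Step Functions.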

Infrastructure as Code

Infrastructure as Code is an emerging practice that encourages the writing of cloud infrastructure as code instead of clicking your way to deployment. I feel like “ClickOps” is where we all started years ago when there weren’t any other options. The lessons learned from the inconsistency in human deployment were the genesis for the automation and power that comes from building your cloud stacks as code. Now, many start from IaC as the patterns and practices are well-defined. But instead of re-hashing those commentaries, I want to give you my opinions on why IaC decisions are about more than the tech. Infrastructure as Code is a shift of responsibilities that brings your teams closer together and will help establish a culture of accountability, but it will come at a cost.

Golang Private Module with CDK CodeBuild

Even experienced builders run into things from time to time that they haven’t seen before and this causes them some trouble. I’ve been working with CDK, CodePipeline, CodeBuild and Golang for several years now and haven’t needed to construct a private Golang module. That changed a few weeks ago and it threw me, as I needed to also include it in a CodePipeline with a CodeBuild step. This article is more documentation and reference for the future, as I want to share the pattern learned for building Golang private modules with CodeBuild.

Building Golang Lambda Functions

Using CDK for building Golang Lambda functions is a super simple process and easy enough to work with. The construct is well documented and is a subclass of the Function class defined in aws-cdk-lib/aws-lambda. Unsure about CDK or what it can do for you? Have a read here to get started and see what all the fuss is about.